Speeding Up Deep Learning Inference Using TensorFlow, ONNX, and NVIDIA TensorRT | NVIDIA Technical Blog

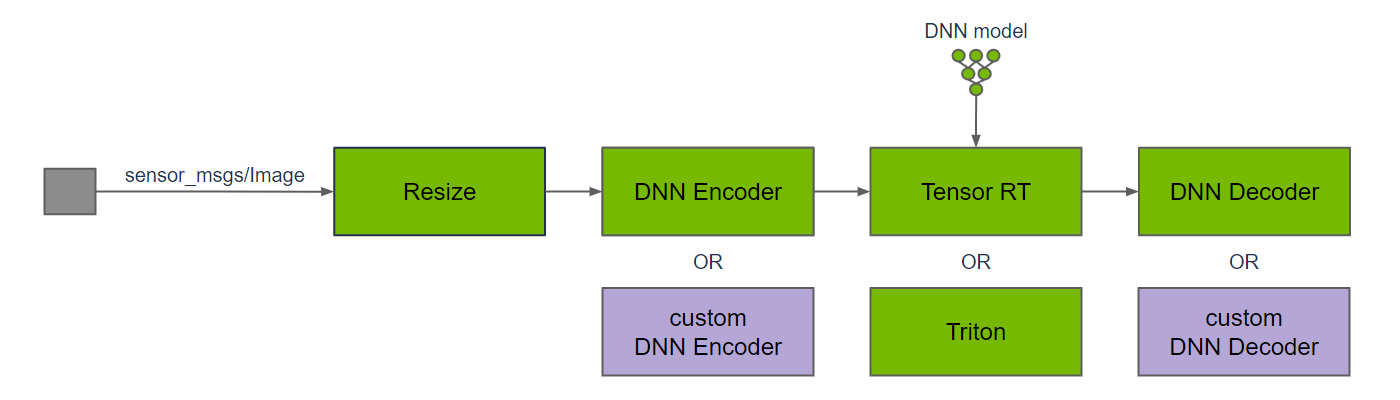

GitHub - NVIDIA-ISAAC-ROS/isaac_ros_dnn_inference: Hardware-accelerated DNN model inference ROS 2 packages using NVIDIA Triton/TensorRT for both Jetson and x86_64 with CUDA-capable GPU

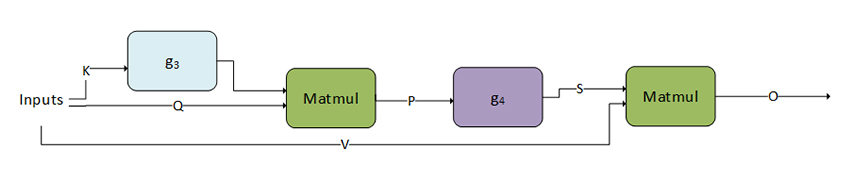

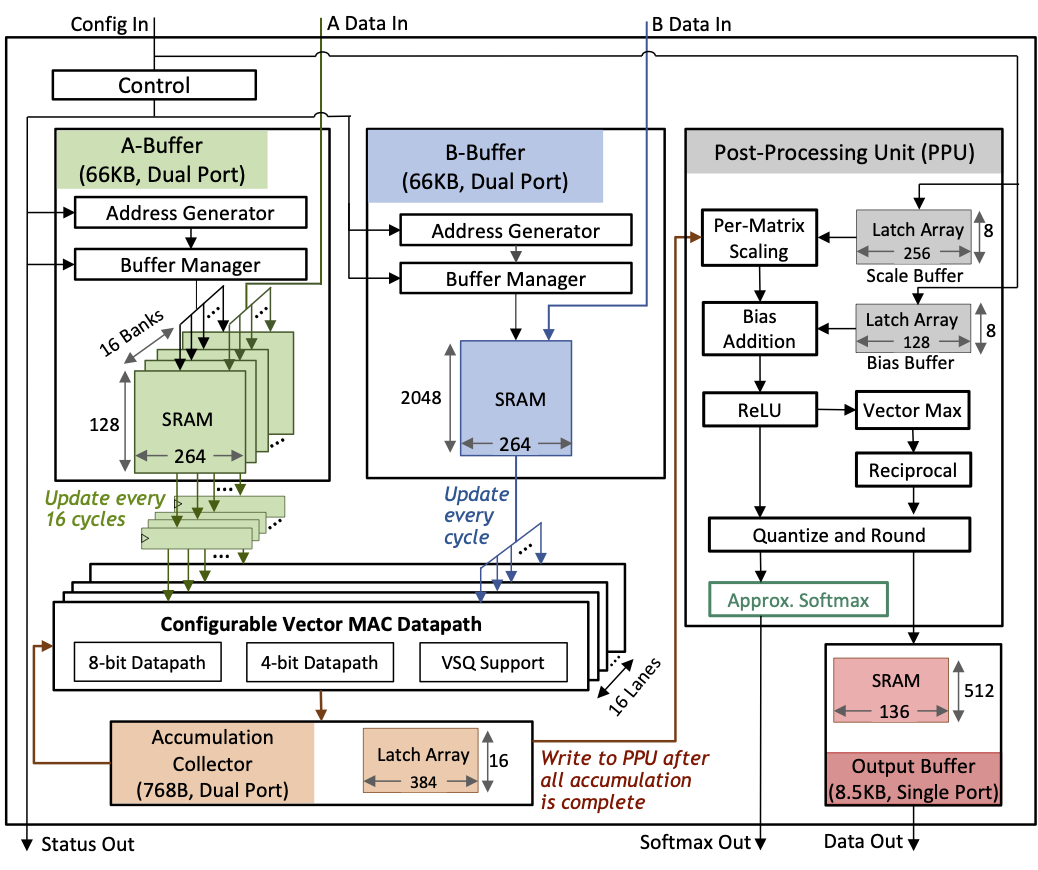

A 17–95.6 TOPS/W Deep Learning Inference Accelerator with Per-Vector Scaled 4-bit Quantization for Transformers in 5nm | Research

Benchmarking Deep Neural Networks for Low-Latency Trading and Rapid Backtesting on NVIDIA GPUs | NVIDIA Technical Blog

Discovering GPU-friendly Deep Neural Networks with Unified Neural Architecture Search | NVIDIA Technical Blog

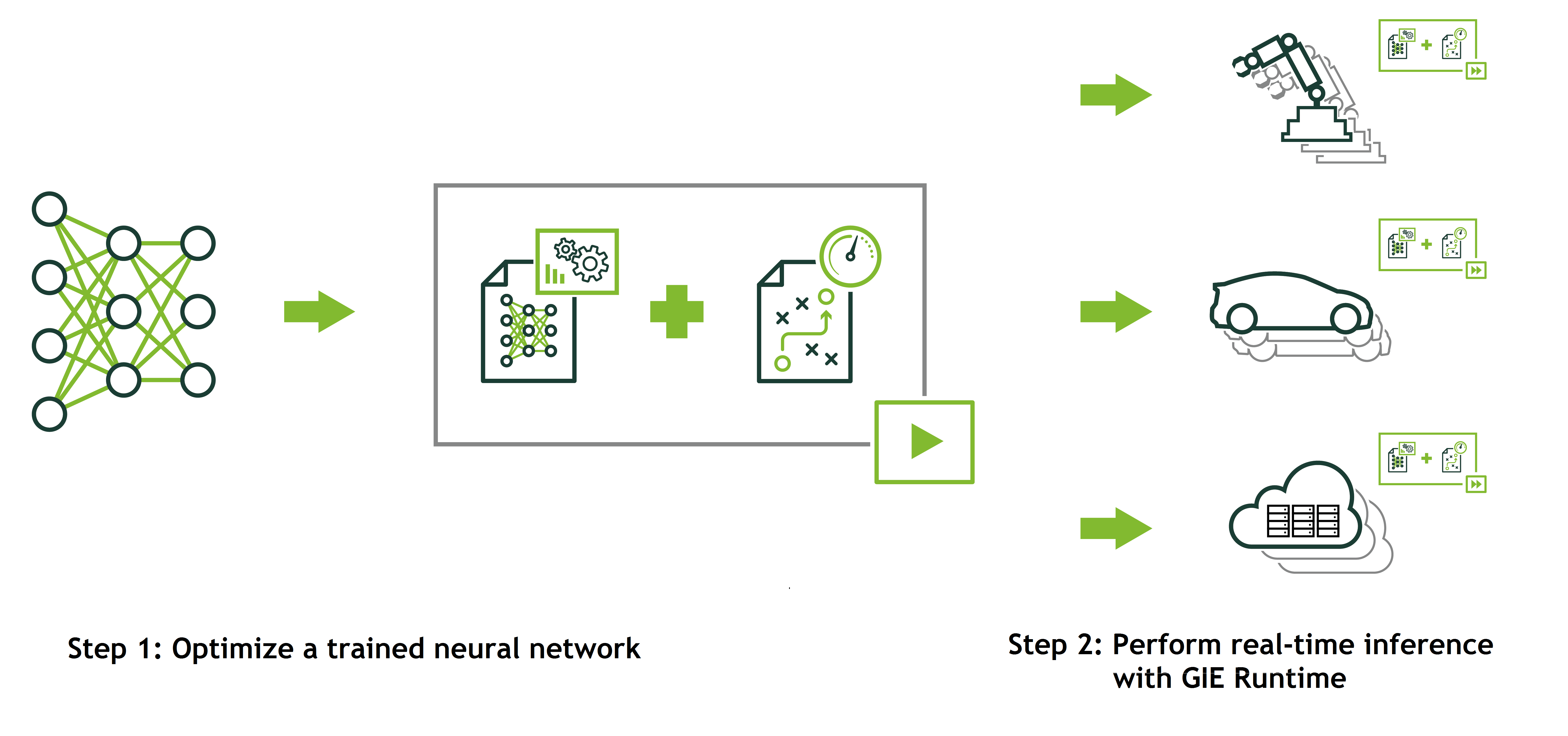

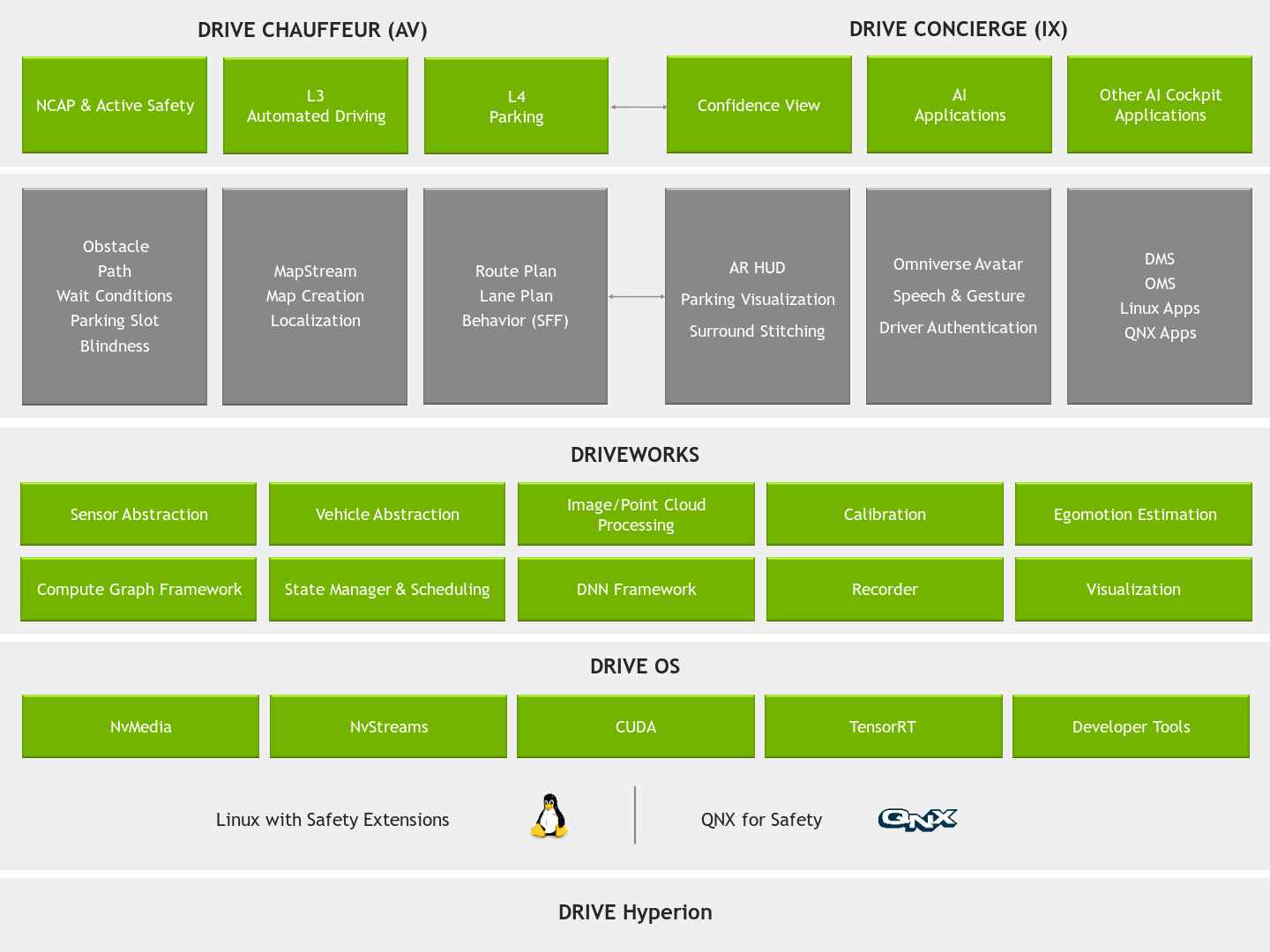

Integrating DNN Inference into Autonomous Vehicle Applications with NVIDIA DriveWorks SDK | NVIDIA On-Demand