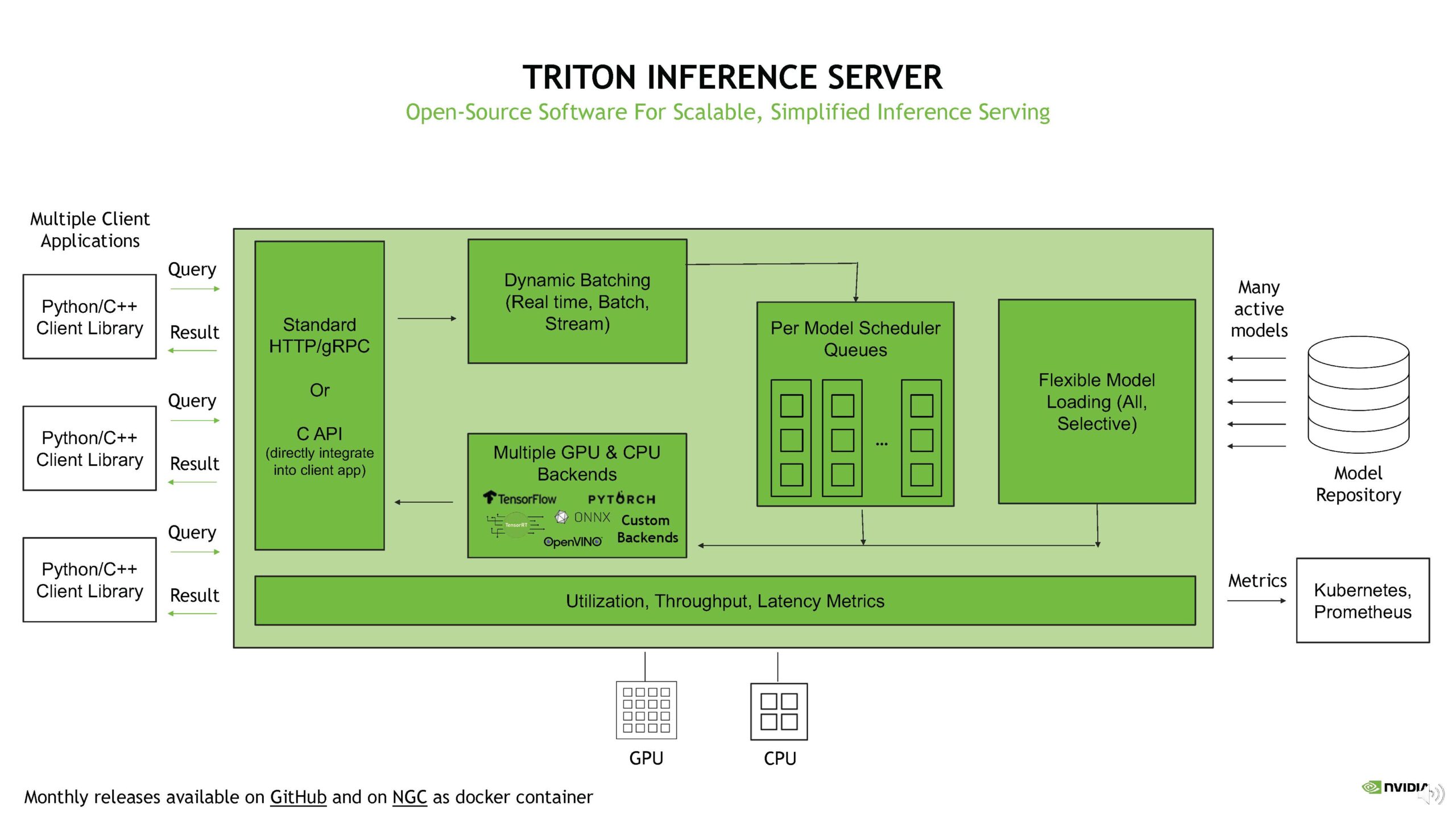

From Research to Production I: Efficient Model Deployment with Triton Inference Server | by Kerem Yildirir | Oct, 2023 | Make It New

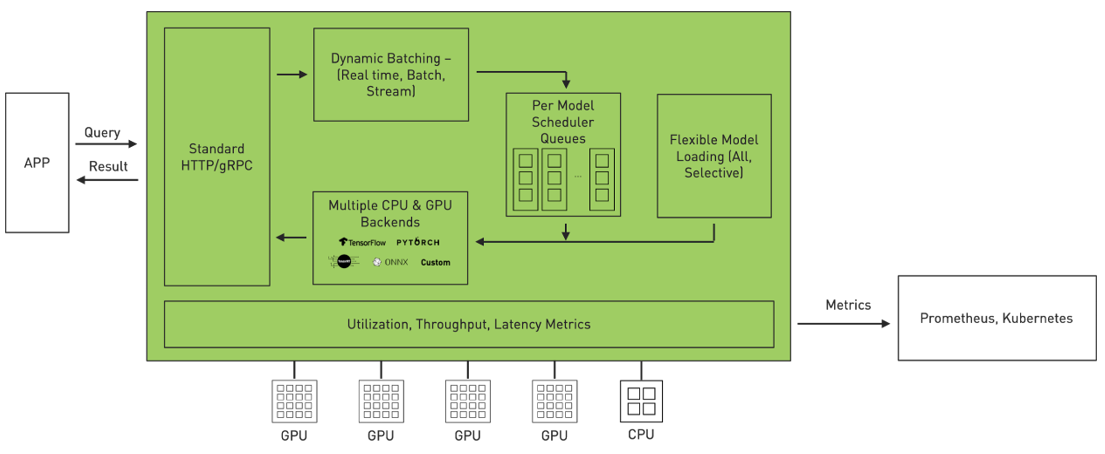

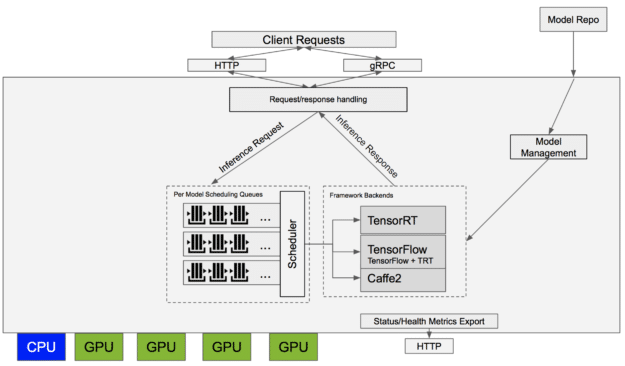

Achieve hyperscale performance for model serving using NVIDIA Triton Inference Server on Amazon SageMaker | AWS Machine Learning Blog

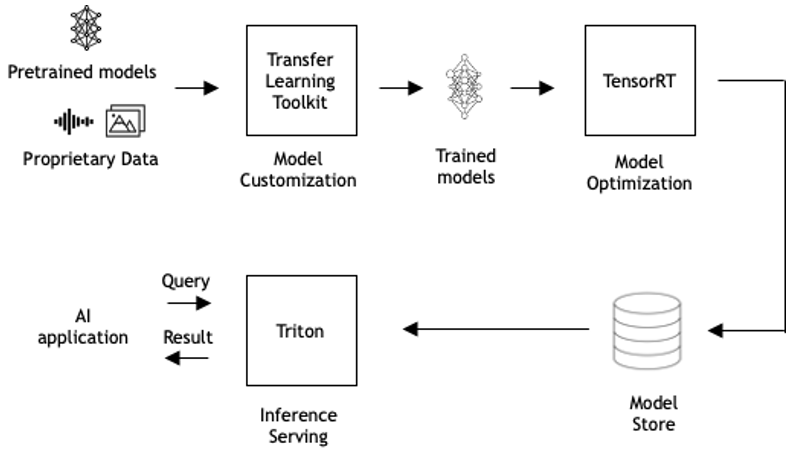

Building a Scaleable Deep Learning Serving Environment for Keras Models Using NVIDIA TensorRT Server and Google Cloud

Serving Inference for LLMs: A Case Study with NVIDIA Triton Inference Server and Eleuther AI — CoreWeave

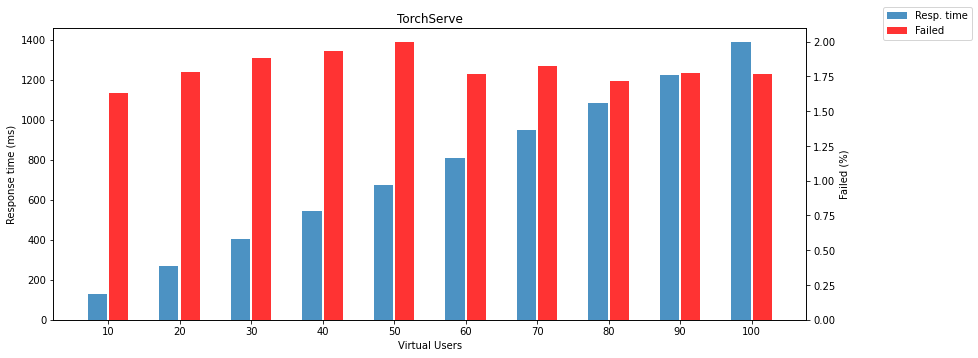

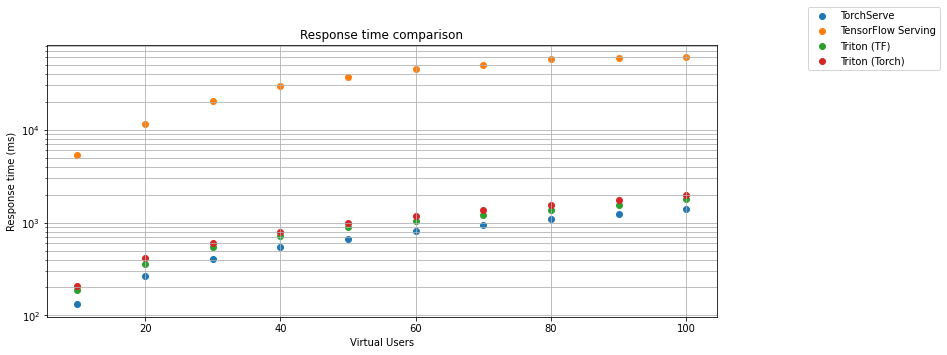

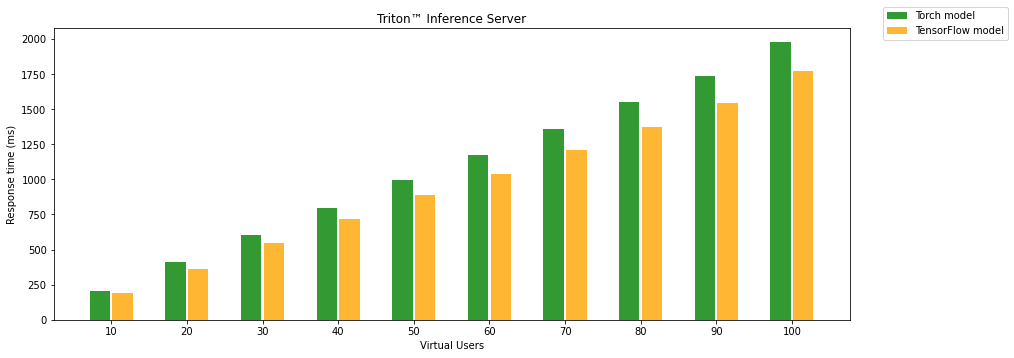

TensorRT and PyTorch benchmark (batch size 32)